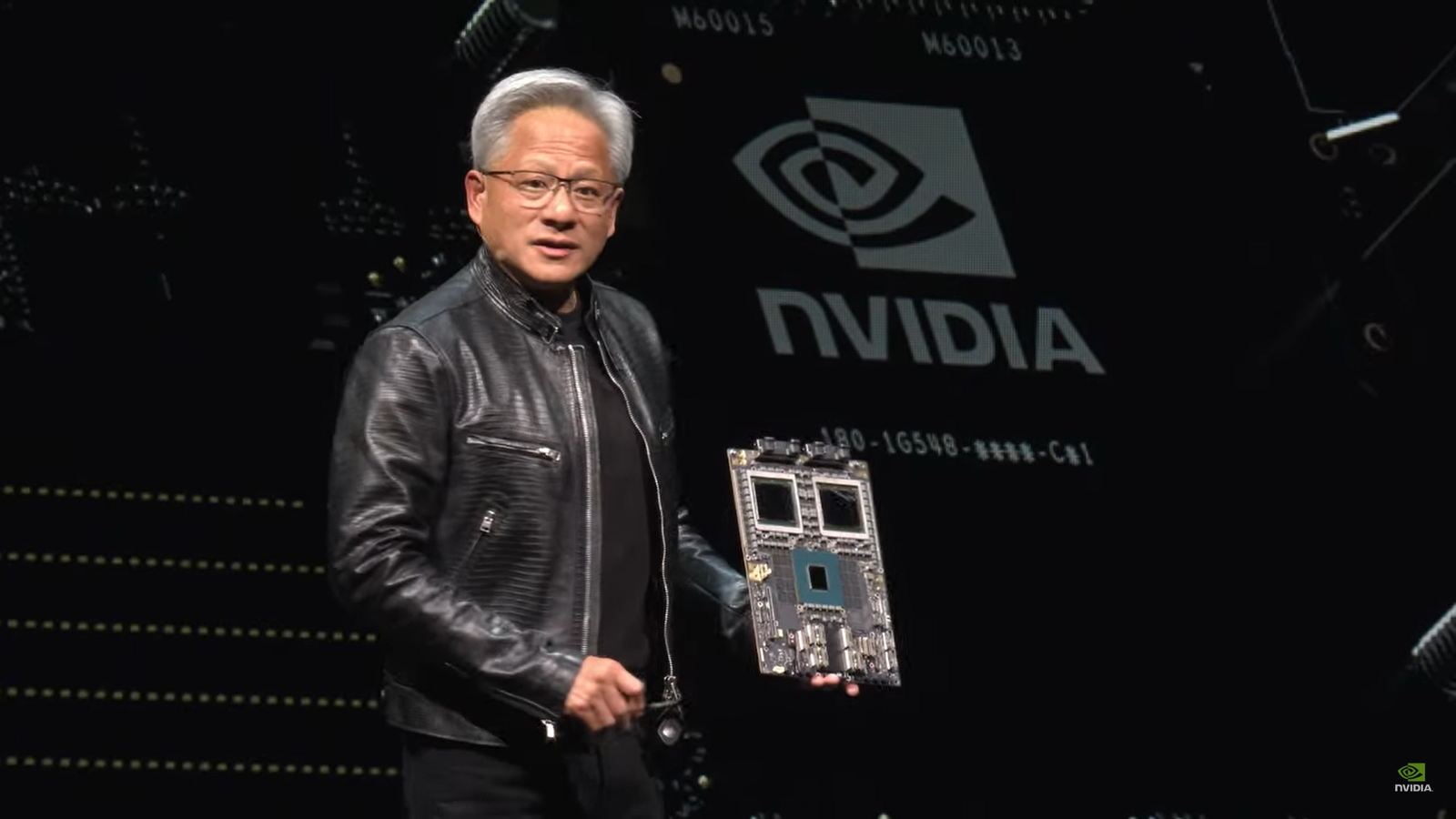

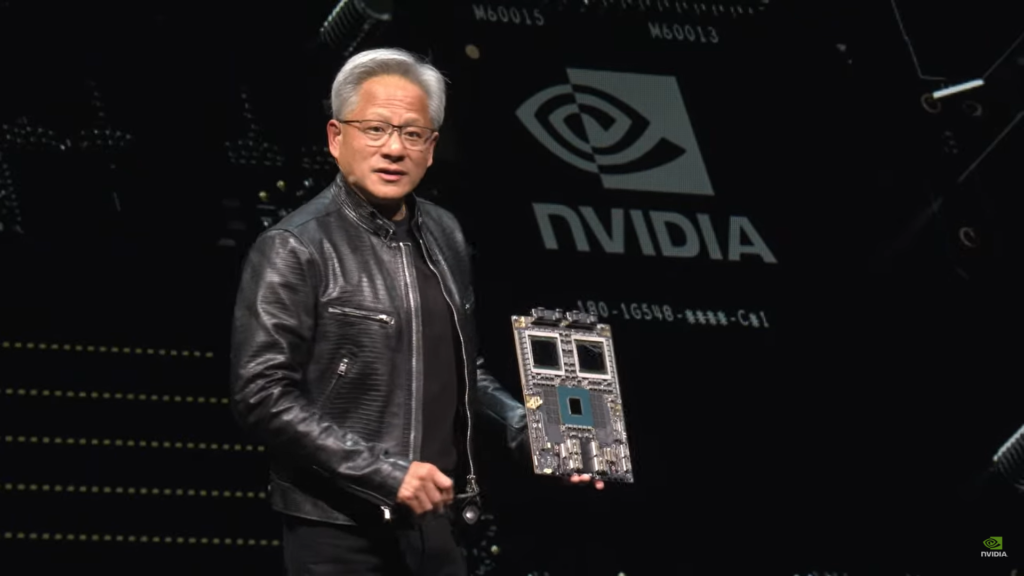

Nvidia has unveiled its next-generation artificial intelligence (AI) platform, codenamed “Rubin,” slated for release in 2026. The announcement comes shortly after the company introduced its current AI chip platform, “Blackwell,” which has yet to reach the market. Nvidia’s CEO, Jensen Huang, also said the company plans to upgrade its AI accelerators annually, a cadence meant to keep Nvidia ahead in the rapidly evolving AI landscape.

Key Takeaways:

- Rubin AI platform to launch in 2026, featuring new GPU, CPU, and networking chips

- Blackwell Ultra chip scheduled for 2025 release

- Nvidia aims to upgrade AI accelerators yearly, accelerating product cycles

- Rubin GPU to incorporate HBM4 (High-Bandwidth Memory 4) technology

- Strategy aimed at maintaining Nvidia’s dominance of the AI chip market

Accelerating Product Cycles

During his keynote address at the Computex trade show in Taiwan, Huang announced that Nvidia would release new AI chip technology annually, a significant acceleration from the previous two-year cycle. This move underscores Nvidia’s determination to maintain its position as a leading AI chip supplier and a top-tier global corporation.

Rubin AI Platform: The Next Generation

The Rubin AI platform, set to debut in 2026, will feature a new graphics processing unit (GPU), an Arm-based central processing unit (CPU) named “Vera,” and advanced networking components such as NVLink 6 and CX9 SuperNIC. Huang revealed that the Rubin GPU would incorporate HBM4 (High-Bandwidth Memory 4) technology, a crucial advancement in addressing the memory bottleneck that has hindered AI accelerator production.

Blackwell Ultra: Bridging the Gap

Before Rubin enters mass production, Nvidia plans to release the “Blackwell Ultra” chip in 2025. This interim upgrade is intended to provide a performance boost over the current Blackwell platform, which includes the B100 AI chip, said to process data 2.5 times faster than its predecessor, the H100.

Intensifying Competition

Nvidia’s aggressive product roadmap is expected to significantly affect the South Korean semiconductor market, particularly in high-bandwidth memory (HBM). Samsung Electronics and SK hynix are focusing their efforts on developing and mass-producing HBM4 in time for the launch of Rubin.

Meanwhile, Nvidia’s primary competitor, AMD, is not standing still. At the same Computex event, AMD CEO Lisa Su unveiled the next-generation AI accelerator “AMD Instinct MI325X,” which is likely to be equipped with Samsung’s HBM3E memory. That pairing points to a collaborative effort between Samsung and AMD to counter the “Nvidia-SK hynix” alliance.

A Calculated Risk

Nvidia’s decision to reveal Rubin so soon after announcing Blackwell is a calculated risk, as it could cannibalize sales of the current platform. However, Huang expressed his belief that the intersection of AI and accelerated computing could revolutionize the future, a view that underpins the company’s aggressive product roadmap.

Conclusion

Nvidia’s unveiling of the Rubin AI platform and its commitment to annual chip upgrades demonstrate the company’s determination to stay ahead in the rapidly evolving AI landscape. With competitors such as AMD and Intel also vying for a share of the lucrative AI chip market, the race to develop the most advanced and efficient AI accelerators is heating up.

The early reveal could pay off if Nvidia delivers on its promises of cost and energy savings, solidifying its position as a market leader in the AI revolution.